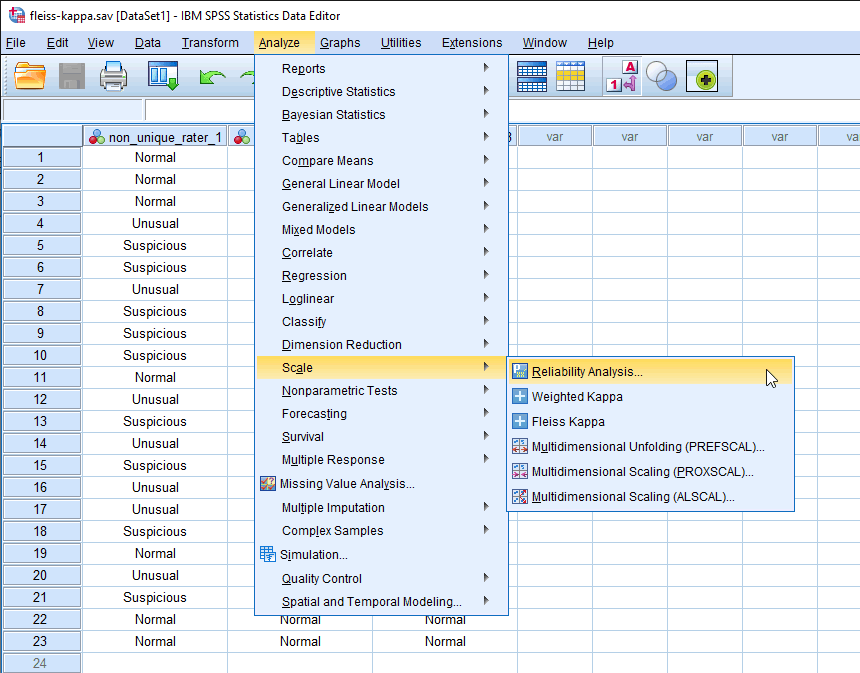

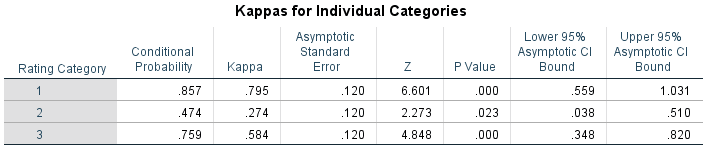

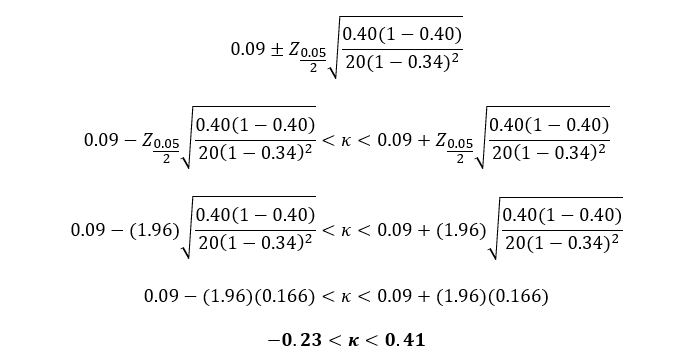

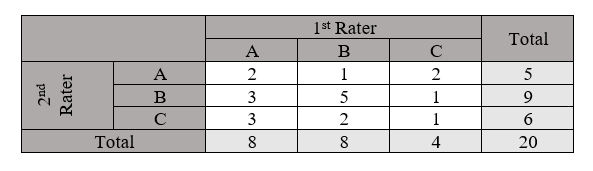

Fleiss' multirater kappa (1971) is a chance-adjusted index of agreement for multirater categorization of nominal variables.
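To make "chance-adjusted" concrete, here is a minimal sketch of the standard Fleiss' kappa computation, kappa = (P_bar - P_e) / (1 - P_e), where P_bar is the mean observed per-subject agreement and P_e is the agreement expected by chance from the overall category proportions. The function name `fleiss_kappa` and the `counts` input layout (one row per subject, one column per category, each cell holding the number of raters who chose that category) are assumptions made for illustration, not something taken from the source.

```python
import math


def fleiss_kappa(counts):
    """Fleiss' kappa for a subjects x categories table of rating counts.

    counts[i][j] is the number of raters who assigned subject i to
    category j (hypothetical input layout chosen for this sketch).
    Every subject must be rated by the same number of raters.
    """
    n_subjects = len(counts)
    if n_subjects == 0:
        raise ValueError("need at least one subject")

    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two ratings per subject")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every subject must have the same number of ratings")

    n_categories = len(counts[0])
    total_ratings = n_subjects * n_raters

    # Overall proportion of all ratings that fall in each category.
    p = [
        sum(row[j] for row in counts) / total_ratings
        for j in range(n_categories)
    ]

    # Observed agreement per subject: the fraction of rater pairs that agree.
    per_subject = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(per_subject) / n_subjects

    # Agreement expected by chance from the category proportions.
    p_e = sum(x * x for x in p)

    if math.isclose(p_e, 1.0):
        # All ratings fall in one category, so kappa is undefined.
        return float("nan")

    return (p_bar - p_e) / (1.0 - p_e)
```

With three raters and two categories, `fleiss_kappa([[3, 0], [0, 3]])` describes perfect agreement and yields a kappa of 1, while a table in which raters split on every subject yields a value at or below zero.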

Cohen's Kappa and Fleiss' Kappa— How to Measure the Agreement Between Raters | by Audhi Aprilliant | Medium


![Large sample standard errors of kappa and weighted kappa | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f2c9636d43a08e20f5383dbf3b208bd35a9377b0/4-Table2-1.png)